However, you can easily create it and add your own configuration files. The SPARK_DIST_CLASSPATH is set to your HADOOP_CLASSPATH as output by the hadoop classpath command. When I looked for that directory, it didnt exist presumably its not part of the pip installation. To find the correct AWS S3 SDK JAR version you should go to the Maven Repository page for the hadoop-aws.jar file for the Hadoop version you're installing (in the example above this is hadoop-aws-2.8.5jar so the page is ) and look at the AWS S3 SDK JAR version in the Compile Dependencies section In this case it is aws-java-sdk-s3-1.10.6.jar. The key parts of this dockerfile are where the Pyspark package is built and installed: cd /usr/local/spark/python & \Īnd ensuring you have the correct AWS S3 SDK JAR to match your Hadoop version: RUN wget & \ '/usr/local/hadoop/share/hadoop/tools/lib/*"' > /usr/local/spark/conf/spark-env.sh '/usr/local/hadoop/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:'\ '/usr/local/hadoop/share/hadoop/yarn/*:/usr/local/hadoop/share/hadoop/mapreduce/lib/*:'\

'/usr/local/hadoop/share/hadoop/hdfs/*:/usr/local/hadoop/share/hadoop/yarn/lib/*:'\

'/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/*:'\ '/usr/local/hadoop/share/hadoop/common/lib/*:/usr/local/hadoop/share/hadoop/common/*:'\ RUN echo 'export SPARK_DIST_CLASSPATH="/usr/local/hadoop/etc/hadoop:'\ # Set SPARK_DIST_CLASSPATH in $SPARK_HOME/conf/spark-env.sh to point to Hadoop install Mv aws-java-sdk-s3-1.10.6.jar /usr/local/hadoop/share/hadoop/tools/lib/

#Where does pip install pyspark download#

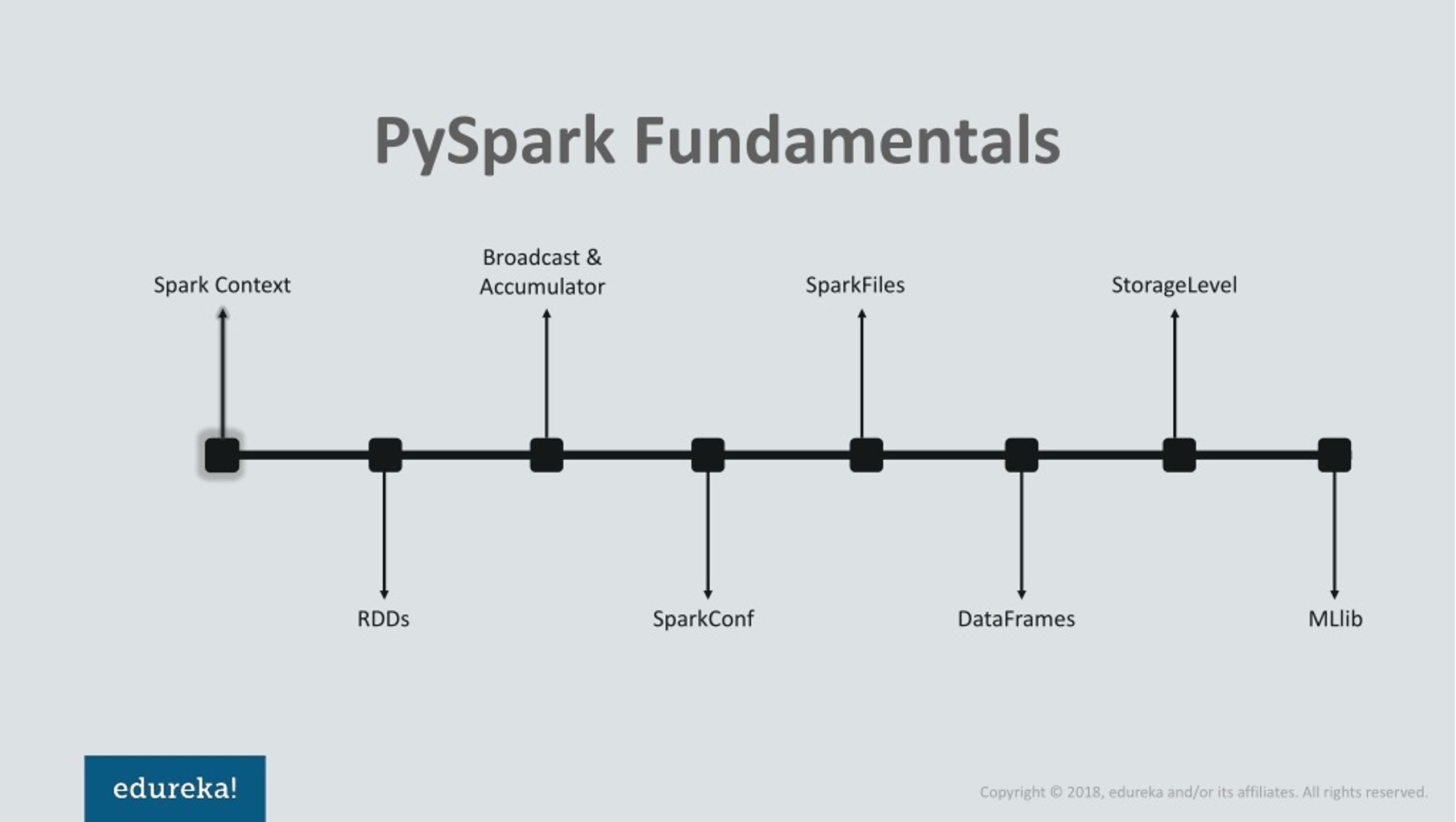

# Download correct AWS Java SDK for S3 for Hadoop version being used Ln -s /usr/local/hadoop-2.8.5 /usr/local/hadoop # Unpack and move to /usr/local and make symlink from /usr/local/hadoop to specific Hadoop version Ln -s /usr/local/spark-2.4.3 /usr/local/spark & \ Tar xzvf spark-2.4.3-bin-without-hadoop.tgz & \ # Build and install Pyspark from this download # Unpack and move to /usr/local and make symlink from /usr/local/spark to specific Spark version In order to work around this you will need to install the "no hadoop" version of Spark, build the Pyspark installation bundle from that, install it, then install the Hadoop core libraries needed and point Pyspark at those libraries.īelow is a dockerfile to do just this using Spark 2.4.3 and Hadoop 2.8.5: # The reason for this is that Pyspark is built with a specific version of Hadoop by default. This despite that fact you see to have the correct JARs in place. Py4JJavaError: An error occurred while calling o69.parquet: : Could not initialize class .s3a.S3AFileSystem at 0(Native Method)

Next, select New and add the directory where you installed PIP.

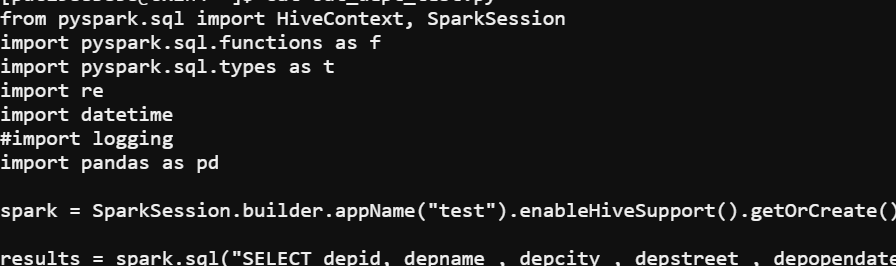

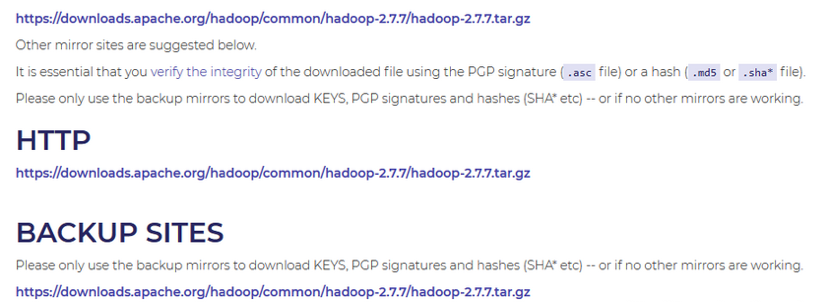

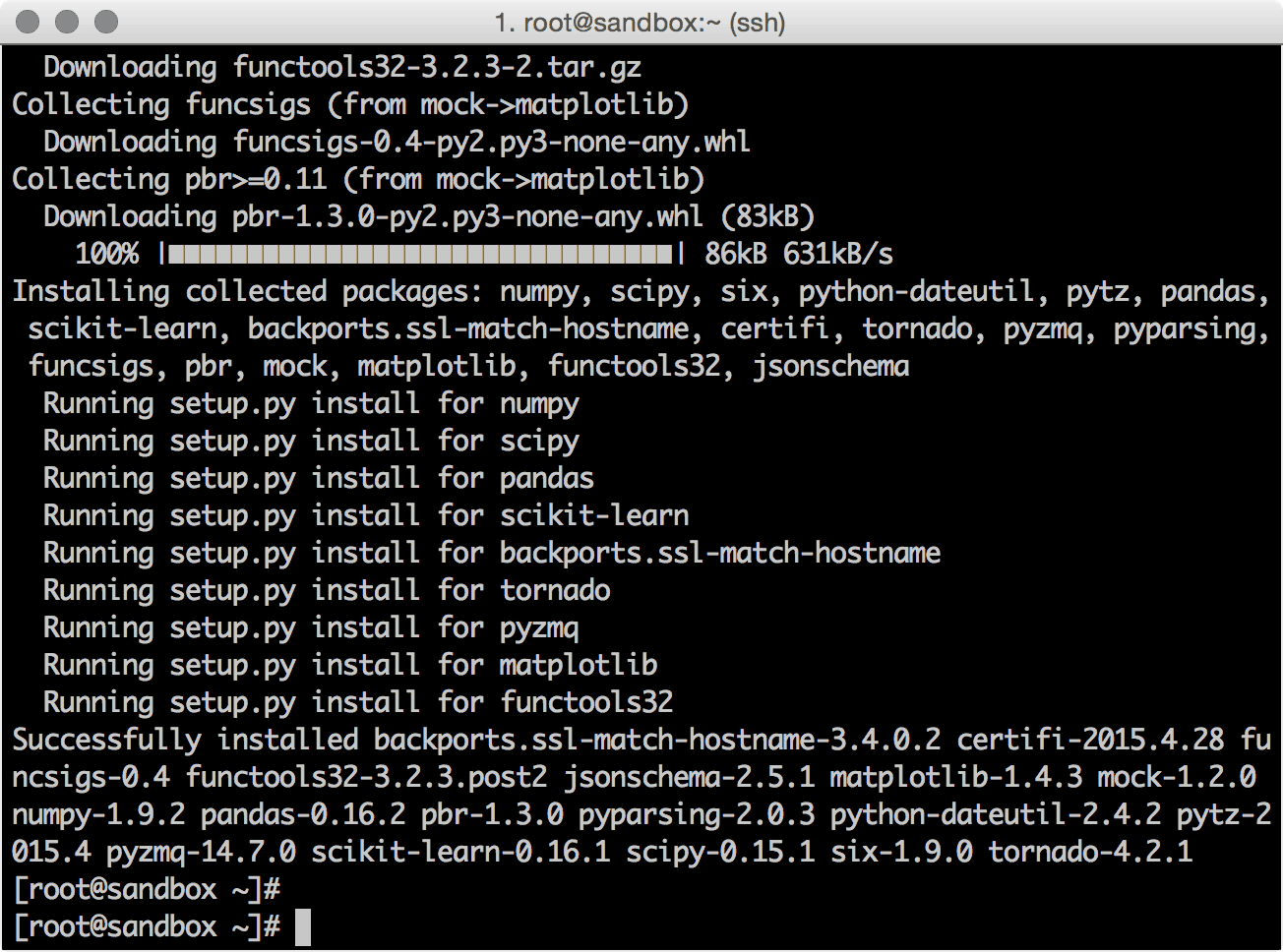

Though the other packages were notably smaller.When installing Pyspark from Pip, Anaconda or downloading from you will find that it will only work with certain versions of Hadoop (Hadoop 2.7.2 for Pyspark 2.4.3 for example.) If you are using a different version of Hadoop and try to access something like S3 from Spark you will receive errors such as Open the Environment Variables and double-click on the Path variable in the System Variables. Pip worked for all the other packages I tried to install but for this one. data from the Datbase and then convert into Pandas dataframe. pip3 install -user) but that did not help either. Saving a dataframe as a CSV file using PySpark: Step 1: Set up the. I also tried to install the package locally (e.g. Select PySpark and click Install Package. Go to File -> Settings -> Project -> Project Interpreter.

#Where does pip install pyspark full#

My system drive seems to be a bit full but it looks like there should be enough space to install the package. Once you have installed and opened P圜harm youll need to enable PySpark. I did try to use a different TMP_DIR as suggested by some StackOverflow answers like this one but that did not fix the problem in my case. Using cached torch-1.8.1-cp38-cp38-manylinux1_x86_64.whl (804.1 MB)ĮRROR: Could not install packages due to an EnvironmentError: No space left on deviceīut according to df there should be enough space around both on the system partition as well as in tmpfs.įilesystem Size Used Avail Use% Mounted on When I try to install the pytorch module for python3.8 pip complains that there is no space left on the device e.g.
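The Dockerfile fetches the AWS SDK JAR with RUN wget, but the download URL itself is not shown. Here is a minimal sketch, assuming the JAR comes from Maven Central (https://repo1.maven.org/maven2). Maven Central uses the standard layout `<groupId as path>/<artifactId>/<version>/<artifactId>-<version>.jar`, so you can build a URL from the coordinates listed under Compile Dependencies. The helper name `maven_jar_url` is hypothetical, and this is not necessarily the URL the original Dockerfile used.

```python
# Sketch (assumption: JARs are downloaded from Maven Central's standard Maven 2 layout):
#   <repo>/<groupId with dots as slashes>/<artifactId>/<version>/<artifactId>-<version>.jar
MAVEN_CENTRAL = "https://repo1.maven.org/maven2"


def maven_jar_url(group_id: str, artifact_id: str, version: str,
                  repo: str = MAVEN_CENTRAL) -> str:
    """Hypothetical helper: URL of a JAR in a Maven 2 layout repository."""
    group_path = group_id.replace(".", "/")
    return f"{repo.rstrip('/')}/{group_path}/{artifact_id}/{version}/{artifact_id}-{version}.jar"


if __name__ == "__main__":
    # Version taken from hadoop-aws 2.8.5's Compile Dependencies, as stated in the text.
    print(maven_jar_url("com.amazonaws", "aws-java-sdk-s3", "1.10.6"))
```

Swap in a mirror or internal repository by passing `repo=`; the path layout stays the same.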
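The RUN echo step hard-codes SPARK_DIST_CLASSPATH into spark-env.sh. The sketch below generates the same value for any Hadoop prefix and writes the file, creating conf/ when it is missing. The helpers `spark_dist_classpath` and `write_spark_env` are hypothetical names, and the entry list simply mirrors the Dockerfile. Where the hadoop command is available, Spark's "Hadoop free" build documentation suggests `export SPARK_DIST_CLASSPATH=$(hadoop classpath)` instead of a fixed list.

```python
import tempfile
from pathlib import Path

# Classpath entries copied from the Dockerfile's RUN echo line, in the same order.
# Relative entries are resolved against HADOOP_HOME; the absolute
# /contrib/capacity-scheduler entry is kept verbatim, as in the Dockerfile.
HADOOP_CLASSPATH_ENTRIES = [
    "etc/hadoop",
    "share/hadoop/common/lib/*",
    "share/hadoop/common/*",
    "share/hadoop/hdfs",
    "share/hadoop/hdfs/lib/*",
    "share/hadoop/hdfs/*",
    "share/hadoop/yarn/lib/*",
    "share/hadoop/yarn/*",
    "share/hadoop/mapreduce/lib/*",
    "share/hadoop/mapreduce/*",
    "/contrib/capacity-scheduler/*.jar",
    "share/hadoop/tools/lib/*",
]


def spark_dist_classpath(hadoop_home: str) -> str:
    """Hypothetical helper: join the Hadoop entries into one colon-separated value."""
    home = hadoop_home.rstrip("/")
    parts = [e if e.startswith("/") else f"{home}/{e}" for e in HADOOP_CLASSPATH_ENTRIES]
    return ":".join(parts)


def write_spark_env(spark_home: str, classpath: str) -> Path:
    """Hypothetical helper: write conf/spark-env.sh, creating conf/ if it is missing."""
    conf_dir = Path(spark_home) / "conf"
    conf_dir.mkdir(parents=True, exist_ok=True)
    env_file = conf_dir / "spark-env.sh"
    env_file.write_text(f'export SPARK_DIST_CLASSPATH="{classpath}"\n')
    return env_file


if __name__ == "__main__":
    # Write into a throwaway directory rather than a real Spark install.
    demo_home = tempfile.mkdtemp()
    path = write_spark_env(demo_home, spark_dist_classpath("/usr/local/hadoop"))
    print(path.read_text())
```

For /usr/local/hadoop this produces the same export line that the Dockerfile's echo writes.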
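To check where pip actually put PySpark, and whether a conf/ directory exists there, you can ask Python's import system. This is a minimal sketch using the standard `importlib.util.find_spec`. The helper names are hypothetical, and the sketch assumes you run it with the same interpreter that pip installed into. For a pip install, PySpark typically treats its own package directory as its Spark home, which is why a missing conf/ there matters.

```python
import importlib.util
from pathlib import Path
from typing import Optional


def installed_package_dir(name: str) -> Optional[Path]:
    """Hypothetical helper: directory a top-level package was installed into, or None."""
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.submodule_search_locations:
        # Not installed for this interpreter, or a plain module rather than a package.
        return None
    return Path(list(spec.submodule_search_locations)[0])


def has_conf_dir(package_dir: Path) -> bool:
    """True if the package directory contains a conf/ subdirectory."""
    return (package_dir / "conf").is_dir()


if __name__ == "__main__":
    pkg = installed_package_dir("pyspark")
    if pkg is None:
        print("pyspark is not installed for this interpreter")
    else:
        print("pyspark installed at:", pkg)
        print("conf/ present:", has_conf_dir(pkg))
```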
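For the Windows PATH step, this sketch assumes that "the directory where you installed pip" means the interpreter's Scripts folder, where pip.exe lives on Windows. It reports that folder via `sysconfig.get_path("scripts")` and checks whether it is already on PATH. The helper names are hypothetical. After you edit the variable in the dialog, only newly opened terminals see the change.

```python
import os
import sysconfig
from typing import Optional


def _norm(path: str) -> str:
    # Strip surrounding quotes that sometimes end up in PATH entries,
    # then normalise separators, trailing slashes and (on Windows) case.
    return os.path.normcase(os.path.normpath(path.strip().strip('"')))


def dir_on_path(directory: str, path_value: Optional[str] = None) -> bool:
    """Hypothetical helper: is `directory` one of the entries of PATH?"""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    wanted = _norm(directory)
    return any(_norm(entry) == wanted
               for entry in path_value.split(os.pathsep) if entry.strip())


if __name__ == "__main__":
    scripts_dir = sysconfig.get_path("scripts")
    print("pip's Scripts directory:", scripts_dir)
    print("already on PATH:", dir_on_path(scripts_dir))
```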
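The "No space left on device" error may have a simpler cause; this is one possible explanation, not a confirmed diagnosis. pip unpacks and builds in temporary directories created through Python's tempfile module. That module consults the TMPDIR, TEMP and TMP environment variables, in that order, and ignores a variable named TMP_DIR. If the variable that was tried was literally TMP_DIR, pip would have kept using the default, possibly small tmpfs-backed, temp directory.

The sketch below does three things:

* checks which directory a fresh interpreter picks;
* reports free space with `shutil.disk_usage`;
* builds a pip command that sets TMPDIR and passes pip's `--no-cache-dir` option, so pip neither reads nor writes its wheel cache.

The helper names are hypothetical.

```python
import os
import shutil
import subprocess
import sys
import tempfile
from typing import Dict, List, Tuple


def effective_tempdir(env: Dict[str, str]) -> str:
    """Hypothetical helper: temp dir a fresh Python process picks under `env`."""
    result = subprocess.run(
        [sys.executable, "-c", "import tempfile; print(tempfile.gettempdir())"],
        env=env, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def free_gib(path: str) -> float:
    """Hypothetical helper: free space (GiB) on the filesystem holding `path`."""
    return shutil.disk_usage(path).free / 2**30


def pip_install_cmd(package: str, tmpdir: str) -> Tuple[List[str], Dict[str, str]]:
    """Hypothetical helper: argv and environment for a pip install using `tmpdir`."""
    env = dict(os.environ, TMPDIR=tmpdir)  # tempfile reads TMPDIR, not TMP_DIR
    argv = [sys.executable, "-m", "pip", "install", "--no-cache-dir", package]
    return argv, env


if __name__ == "__main__":
    scratch = tempfile.mkdtemp()  # stand-in for a directory on a roomier disk
    print("with TMP_DIR:", effective_tempdir(dict(os.environ, TMP_DIR=scratch)))
    print("with TMPDIR: ", effective_tempdir(dict(os.environ, TMPDIR=scratch)))
    print(f"free space there: {free_gib(scratch):.1f} GiB")
    argv, env = pip_install_cmd("torch", scratch)
    print("would run:", " ".join(argv))  # pass env=env to subprocess.run to execute
```

Comparing the two effective_tempdir lines shows directly whether a given variable name is honored before you retry the 804 MB install.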
